The Pennsylvania State University, Spring 2021 Stat 415-001, Hyebin Song

Probability Review

- Elements of probability
- Random variables
- Distribution functions
- Expectation
- Variance
- Some common random variables
- Two or more random variables
- Distribution functions
- Conditional distributions
- Independence
- Expectation and Covariance
- Moment generating functions
- Some limit theorems

Elements of probability

Sample space $\Omega$: The set of all the outcomes of a random experiment.

Event $A$: Any subset of the sample space $\Omega$. In other words, a collection of some possible outcomes.

Probability: A function $P$ that takes an event $A$ as an input and returns a value from $[0, 1]$ as an output, which satisfies the following three properties (Axioms of Probability).

- For any event $A$, $P(A) \ge 0$.

- $P(\Omega) = 1$.

- If $A_1, A_2, \dots$ are disjoint events, then $P\left(\bigcup_i A_i\right) = \sum_i P(A_i)$.

Example Tossing a fair coin twice.

Sample space $\Omega = \{HH, HT, TH, TT\}$

Event: the event of having two heads $\{HH\}$, the event of having at least one tail $\{HT, TH, TT\}$, the event of having a head on the first toss $\{HH, HT\}$, etc.
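The coin-tossing example can be checked by brute-force enumeration. The following is a minimal sketch, assuming all four outcomes are equally likely; the helper name `prob` is introduced here only for illustration.

```python
from fractions import Fraction
from itertools import product

# Sample space for two tosses of a fair coin: 'HH', 'HT', 'TH', 'TT'.
sample_space = ["".join(t) for t in product("HT", repeat=2)]

def prob(event):
    """Probability of an event (a subset of the sample space), equal weights assumed."""
    return Fraction(len(set(event) & set(sample_space)), len(sample_space))

two_heads = {"HH"}
at_least_one_tail = {w for w in sample_space if "T" in w}
head_first = {w for w in sample_space if w[0] == "H"}

print(prob(two_heads), prob(at_least_one_tail), prob(head_first))
# The first two events are disjoint and cover the sample space,
# so their probabilities add up to 1.
print(prob(two_heads) + prob(at_least_one_tail))
```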

Random variables

A random variable is a variable whose outcome is determined by a random experiment.

- More formally, a random variable $X$ is a function $X: \Omega \to \mathbb{R}$ which takes an element $\omega$ of the sample space $\Omega$ as an input and returns a real number $X(\omega)$.

- We denote random variables using upper case letters $X(\omega)$, or simply $X$.

Events can be defined in terms of random variables:

For example, the following are events:

- $\{X = a\}$ for a number $a$

- $\{X \in A\}$ for $A \subseteq \mathbb{R}$

Therefore it makes sense to write $P(X = a)$ or $P(X \in A)$.

More formally,

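- $P(X = a) = P(\{\omega \in \Omega : X(\omega) = a\})$. Similarly,

- $P(X \in A) = P(\{\omega \in \Omega : X(\omega) \in A\})$.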

Also note, $P(a < X \le b) = P(X \le b) - P(X \le a)$ for any constants $a, b$ with $a < b$.
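A probability statement about a random variable is a probability of a set of outcomes. The sketch below illustrates this under an assumed setup (two tosses of a fair coin, with the random variable counting heads); the names `X` and `prob_X_in` are hypothetical helpers, not notation from the notes.

```python
from fractions import Fraction

# Assumed setup: two tosses of a fair coin, equally likely outcomes.
sample_space = ["HH", "HT", "TH", "TT"]

def X(outcome):
    """A random variable is a function from outcomes to real numbers."""
    return outcome.count("H")

def prob_X_in(A):
    """P(X in A), computed as the probability of the outcomes w with X(w) in A."""
    preimage = [w for w in sample_space if X(w) in A]
    return Fraction(len(preimage), len(sample_space))

print(prob_X_in({1}))        # P(X = 1)
print(prob_X_in({1, 2}))     # P(X in {1, 2})
```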

Example Tossing a fair coin twice. Let be the number of heads. Compute and ).

Sample space $\Omega = \{HH, HT, TH, TT\}$

$X(HH) = 2$, $X(HT) = X(TH) = 1$, $X(TT) = 0$.

The event that is the same as the event that .

Therefore .

We often use the indicator variable of an event $A$, $\mathbf{1}_A$, which takes the value $1$ if $A$ happens and $0$ otherwise.

For example, $\mathbf{1}_{\{X = 1\}}$ is a random variable which takes the value $1$ if $X = 1$ and $0$ otherwise.

Class Question: what is the distribution of the random variable $\mathbf{1}_{\{X = 1\}}$?

Distribution functions

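A (cumulative) distribution function (cdf) of a random variable $X$ is a function $F_X: \mathbb{R} \to [0, 1]$ such that

$$F_X(x) = P(X \le x).$$

It is often easier to handle the pmf or pdf than the cdf, where

$p_X(x) = P(X = x)$ is a probability mass function (pmf) when $X$ is discrete,

$f_X$ is a probability density function (pdf) when $X$ is continuous.

$f_X$ is a function such that for any $a \le b$,

$$P(a \le X \le b) = \int_a^b f_X(x)\,dx.$$

In particular,

$$P(x \le X \le x + \delta) \approx f_X(x)\,\delta$$

for a small $\delta > 0$.

cdf $\leftrightarrow$ pmf/pdf: $F_X(x) = \sum_{t \le x} p_X(t)$ in the discrete case, and $F_X(x) = \int_{-\infty}^{x} f_X(t)\,dt$ in the continuous case.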
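The relationship between the cdf and the pdf can be checked numerically. This is a small sketch that assumes a standard normal random variable as the example (the choice of distribution and of the numbers `a`, `b`, `x`, `delta` is purely illustrative) and uses `statistics.NormalDist` from the Python standard library.

```python
from statistics import NormalDist

X = NormalDist(mu=0.0, sigma=1.0)  # assumed example distribution

a, b = -1.0, 2.0
# Interval probability from the cdf: P(a <= X <= b) = F(b) - F(a).
p_cdf = X.cdf(b) - X.cdf(a)

# The same probability from the pdf, using a midpoint Riemann sum.
n = 10_000
h = (b - a) / n
p_pdf = sum(X.pdf(a + (i + 0.5) * h) for i in range(n)) * h

# For a small delta, P(x <= X <= x + delta) is approximately f(x) * delta.
x, delta = 0.5, 1e-3
p_small = X.cdf(x + delta) - X.cdf(x)

print(p_cdf, p_pdf)
print(p_small, X.pdf(x) * delta)
```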

Some pmfs or pdfs have names!

Remark We can compute any probability involving $X$ if we know the cdf or pmf/pdf of $X$.

We specify the distribution of $X$ if we specify the cdf or pmf/pdf of $X$.

- Notation: $X \sim F_X$ or $X \sim f_X$

Example

- .

- .

- .

Three main quantities of interest

For a random variable $X$ and any function $g$, three main quantities of interest are

- $E[g(X)]$, average location of $g(X)$

- $\mathrm{Var}(g(X))$, average spread of $g(X)$

Expectation

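For any random variable $X$ with pmf $p_X$ or pdf $f_X$ and any function $g$,

- $E[g(X)] = \sum_x g(x)\,p_X(x)$ if $X$ is discrete

- $E[g(X)] = \int_{-\infty}^{\infty} g(x)\,f_X(x)\,dx$ if $X$ is continuous

Remark: The expectation of $g(X)$ can be thought of as a "weighted average" of the values that $g(x)$ takes, with the weights $p_X(x)$ or $f_X(x)$.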

Properties

- $E[a] = a$ for any constant $a$.

- $E[a\,g(X)] = a\,E[g(X)]$ for any constant $a$.

- $E[g(X) + h(X)] = E[g(X)] + E[h(X)]$ (Linearity of Expectation)
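The weighted-average definition and the linearity property can be verified on a small discrete example. The sketch below assumes the pmf of the number of heads in two tosses of a fair coin; the function name `expect` and the test functions are illustrative choices.

```python
from fractions import Fraction

# Assumed example: pmf of X = number of heads in two tosses of a fair coin.
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

def expect(g, pmf):
    """E[g(X)] as the sum of g(x) * p(x) over the support of a discrete X."""
    return sum(g(x) * p for x, p in pmf.items())

mean = expect(lambda x: x, pmf)                    # E[X]
lhs = expect(lambda x: 3 * x + x**2, pmf)          # E[3X + X^2]
rhs = 3 * mean + expect(lambda x: x**2, pmf)       # 3 E[X] + E[X^2]

print(mean, lhs, rhs)
```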

Exercise We toss an unbiased coin twice. Let be the number of heads from two tosses, and let (the indicator variable for ). Find .

We have where .

Therefore, .

Variance

For any random variable $X$ and any function $g$,

$$\mathrm{Var}(g(X)) = E\left[\left(g(X) - E[g(X)]\right)^2\right].$$

Remark: The variance of a random variable $X$ is a measure of how concentrated the distribution of $X$ is around its mean $E[X]$.

Properties

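$\mathrm{Var}(X) \ge 0$ and $\mathrm{Var}(a) = 0$ for any constant $a$.

$\mathrm{Var}(a\,g(X)) = a^2\,\mathrm{Var}(g(X))$ for any constant $a$.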
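A quick numerical check of some standard variance facts, again assuming the pmf of the number of heads in two fair coin tosses. The sketch verifies that a constant has zero variance, that scaling by a constant scales the variance by its square, and the standard identity $\mathrm{Var}(X) = E[X^2] - (E[X])^2$; the helper names are hypothetical.

```python
from fractions import Fraction

# Assumed example: pmf of X = number of heads in two tosses of a fair coin.
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

def expect(g):
    """E[g(X)] for the discrete pmf above."""
    return sum(g(x) * p for x, p in pmf.items())

def variance(g):
    """Var(g(X)) = E[(g(X) - E[g(X)])^2]."""
    m = expect(g)
    return expect(lambda x: (g(x) - m) ** 2)

var_x = variance(lambda x: x)          # Var(X)
var_3x = variance(lambda x: 3 * x)     # Var(3X), expected to equal 9 * Var(X)
var_const = variance(lambda x: 7)      # variance of a constant
shortcut = expect(lambda x: x**2) - expect(lambda x: x) ** 2

print(var_x, var_3x, var_const, shortcut)
```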

Exercise We toss an unbiased coin twice. Let be the number of heads from two tosses, and let . Find .

We have .

Some common random variables

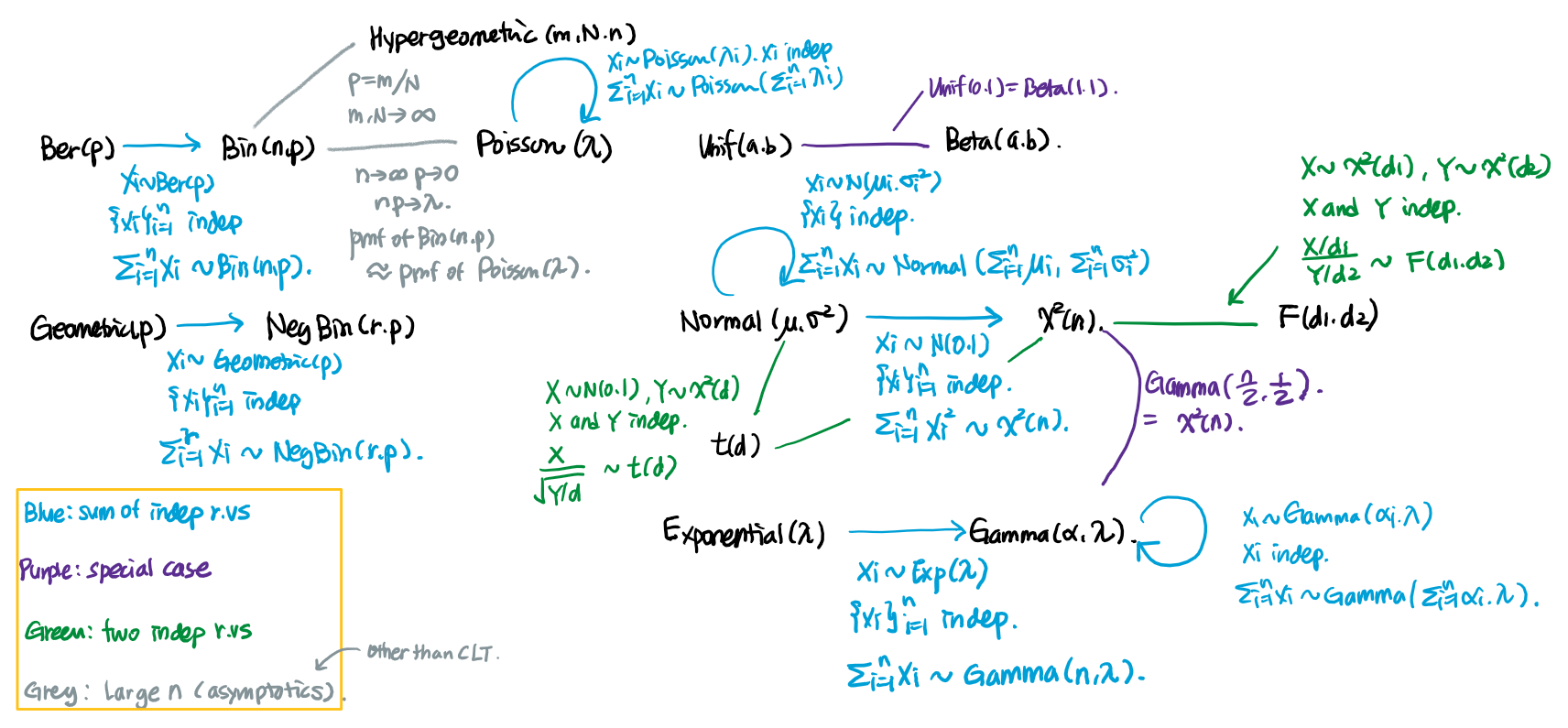

- Discrete random variables: Bernoulli($p$), Binomial($n, p$), Poisson($\lambda$), Geometric($p$), NegBin($r, p$), ...

- Continuous random variables: Normal($\mu, \sigma^2$), Unif($a, b$), Beta($\alpha, \beta$), Exponential($\lambda$), Gamma($\alpha, \beta$), ...

Example The trunk diameter of a pine tree is normally distributed with a mean of cm and a variance of cm. Given that the mean of the trunk diameters of 100 randomly selected pine trees is greater than 156 cm, calculate the conditional probability that the sample mean exceeds 158 cm.

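Let $X_i$ be the trunk diameter of the $i$th selected pine tree, and let $\bar{X} = \frac{1}{100}\sum_{i=1}^{100} X_i$ be the sample mean. The question is

$P(\bar{X} > 158 \mid \bar{X} > 156)$.

By definition,

$$P(\bar{X} > 158 \mid \bar{X} > 156) = \frac{P(\bar{X} > 158,\ \bar{X} > 156)}{P(\bar{X} > 156)} = \frac{P(\bar{X} > 158)}{P(\bar{X} > 156)}.$$

Also we have,

$$\bar{X} \sim N\left(\mu, \frac{\sigma^2}{100}\right),$$

where $\mu$ and $\sigma^2$ denote the mean and variance of a single trunk diameter.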

Therefore, , and

The answer is .
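The calculation in this example can be sketched in code as a ratio of two normal tail probabilities for the sample mean. The function below is a minimal illustration; the parameter values passed to it (`mu=150.0`, `sigma=30.0`) are hypothetical placeholders chosen only to exercise the function and are not taken from the example.

```python
from math import sqrt
from statistics import NormalDist

def cond_prob_mean_exceeds(mu, sigma, n, lower, upper):
    """P(Xbar > upper | Xbar > lower) when Xbar ~ N(mu, sigma^2 / n) and lower <= upper."""
    xbar = NormalDist(mu, sigma / sqrt(n))
    return (1 - xbar.cdf(upper)) / (1 - xbar.cdf(lower))

# Hypothetical parameter values (assumptions, not the values from the example).
p = cond_prob_mean_exceeds(mu=150.0, sigma=30.0, n=100, lower=156.0, upper=158.0)
print(p)
```

Because the event that the sample mean exceeds 158 is contained in the event that it exceeds 156, the joint probability in the numerator reduces to a single tail probability.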

Two or more random variables

A random vector is a collection of random variables.

Examples

- $(X, Y)$ is a random vector of size $2$.

- $(X_1, \dots, X_n)$ is a random vector of size $n$.

Distribution functions

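A joint (cumulative) distribution function of a random vector $(X_1, \dots, X_n)$ is defined by

$$F(x_1, \dots, x_n) = P(X_1 \le x_1, \dots, X_n \le x_n).$$

Remark: by knowing the joint cumulative distribution function, the probability of any event involving $X_1, \dots, X_n$ can be calculated.

As in the univariate case, the distribution of a random vector is completely specified if we specify the joint distribution function.

Alternatively, we can specify the joint pmf (discrete $X_1, \dots, X_n$) or pdf (continuous $X_1, \dots, X_n$).

Joint pmf $p$: $p(x_1, \dots, x_n) = P(X_1 = x_1, \dots, X_n = x_n)$.

Joint pdf $f$: $f$ is a function such that for any $A \subseteq \mathbb{R}^n$,

$$P\left((X_1, \dots, X_n) \in A\right) = \int_A f(x_1, \dots, x_n)\,dx_1 \cdots dx_n.$$

In particular, when $n = 2$,

Joint pmf $p$: $p(x, y) = P(X = x, Y = y)$.

Joint pdf $f$: $f$ is a function such that for any $A \subseteq \mathbb{R}^2$,

$$P\left((X, Y) \in A\right) = \iint_A f(x, y)\,dx\,dy.$$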

The pmf/pdf of each $X_i$ (a.k.a. the marginal pmf/pdf) can be obtained by summing/integrating over the other variables.

For example, when $n = 2$,

- Marginal pmf: $p_X(x) = \sum_y p(x, y)$

- Marginal pdf: $f_X(x) = \int_{-\infty}^{\infty} f(x, y)\,dy$

Conditional distributions

Suppose we have a random vector $(X, Y)$. The conditional distribution of $Y$ given $X = x$ tells us the probability distribution of $Y$ when we know that $X$ takes a certain value $x$.

As before, the probability distribution is completely specified if we know the cdf or pmf/pdf. The cdf or pmf/pdf will usually depend on $x$.

When $X$, $Y$ are discrete, the conditional pmf of $Y$ given $X = x$ is

$$p_{Y|X}(y \mid x) = \frac{p(x, y)}{p_X(x)}$$

for $x$ such that $p_X(x) > 0$.

When $X$, $Y$ are continuous, the conditional pdf of $Y$ given $X = x$ is

$$f_{Y|X}(y \mid x) = \frac{f(x, y)}{f_X(x)}$$

for $x$ such that $f_X(x) > 0$.

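Example Toss an unbiased coin once. $X$ = number of heads, $Y$ = number of tails. Compute the joint pmf and conditional pmf of $Y$ given $X$.

Recall the definition

$$p_{Y|X}(y \mid x) = \frac{p(x, y)}{p_X(x)}.$$

| $p(x, y)$ | $x = 0$ | $x = 1$ |
| --- | --- | --- |
| $y = 0$ | $p(0, 0) = 0$ | $p(1, 0) = 1/2$ |
| $y = 1$ | $p(0, 1) = 1/2$ | $p(1, 1) = 0$ |

We have,

$$p_X(0) = p(0, 0) + p(0, 1) = \frac{1}{2}, \qquad p_X(1) = p(1, 0) + p(1, 1) = \frac{1}{2}.$$

Therefore,

$$p_{Y|X}(0 \mid 0) = 0, \quad p_{Y|X}(1 \mid 0) = 1, \quad p_{Y|X}(0 \mid 1) = 1, \quad p_{Y|X}(1 \mid 1) = 0.$$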
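The same computation can be sketched in code: store the joint pmf as a dictionary, sum over $y$ to get the marginal of $X$, and divide to get the conditional pmf. The function names `marginal_x` and `conditional_y_given_x` are hypothetical helpers for this illustration.

```python
from fractions import Fraction

# Joint pmf p(x, y) for one toss of a fair coin:
# X = number of heads, Y = number of tails.
joint = {
    (0, 0): Fraction(0), (1, 0): Fraction(1, 2),
    (0, 1): Fraction(1, 2), (1, 1): Fraction(0),
}

def marginal_x(joint):
    """Marginal pmf of X, obtained by summing the joint pmf over y."""
    out = {}
    for (x, _y), p in joint.items():
        out[x] = out.get(x, Fraction(0)) + p
    return out

def conditional_y_given_x(joint, x):
    """Conditional pmf of Y given X = x, defined only when p_X(x) > 0."""
    px = marginal_x(joint)[x]
    if px == 0:
        raise ValueError("conditional pmf is undefined when p_X(x) = 0")
    return {y: p / px for (xx, y), p in joint.items() if xx == x}

print(marginal_x(joint))
print(conditional_y_given_x(joint, 0))
print(conditional_y_given_x(joint, 1))
```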

Independence

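Random variables $X_1, \dots, X_n$ are (jointly) independent if

$$F(x_1, \dots, x_n) = F_{X_1}(x_1) \cdots F_{X_n}(x_n)$$

for all values of $x_1, \dots, x_n$. Equivalently,

- For discrete random variables, $p(x_1, \dots, x_n) = \prod_{i=1}^n p_{X_i}(x_i)$.

- For continuous random variables, $f(x_1, \dots, x_n) = \prod_{i=1}^n f_{X_i}(x_i)$.

Example We have a random sample of size $n$, $X_1, \dots, X_n$, such that each $X_i$ follows a Poisson distribution with parameter $\lambda$. Write the joint pmf of $X_1, \dots, X_n$.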
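One way to approach this example is to multiply the marginal Poisson pmfs, which is what independence allows. The sketch below does this numerically and compares the product with the closed form $e^{-n\lambda}\lambda^{\sum_i x_i} / \prod_i x_i!$; the observed counts `xs` and the rate `lam` are hypothetical values chosen for illustration.

```python
from math import exp, factorial, prod

def poisson_pmf(x, lam):
    """Poisson(lam) pmf at a non-negative integer x."""
    return exp(-lam) * lam**x / factorial(x)

def joint_poisson_pmf(xs, lam):
    """Joint pmf of an i.i.d. Poisson(lam) sample: product of the marginal pmfs."""
    return prod(poisson_pmf(x, lam) for x in xs)

# Hypothetical sample and rate, for illustration only.
xs, lam = [2, 0, 3], 1.5
joint_value = joint_poisson_pmf(xs, lam)
closed_form = exp(-len(xs) * lam) * lam ** sum(xs) / prod(factorial(x) for x in xs)

print(joint_value, closed_form)
```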

Expectation and Covariance

Suppose we have two discrete random variables $X$, $Y$. In addition to measuring the average location and spread of $X$ or $Y$, we can measure the covariance of $X$ and $Y$.

Covariance is a measure of linear dependence between $X$ and $Y$, defined as

$$\mathrm{Cov}(X, Y) = E\left[(X - E[X])(Y - E[Y])\right].$$

Properties:

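for any functions $g$ and $h$ such that $E[g(X, Y)]$ and $E[h(X, Y)]$ exist,

$$E[g(X, Y) + h(X, Y)] = E[g(X, Y)] + E[h(X, Y)]$$

(Linearity of expectation)

$\mathrm{Cov}(X, Y) = E[XY] - E[X]E[Y]$.

$\mathrm{Cov}(aX + b, cY + d) = ac\,\mathrm{Cov}(X, Y)$, for any constants $a, b, c, d$.

$\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y) + 2\,\mathrm{Cov}(X, Y)$.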

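If $X$ and $Y$ are independent, then

$$\mathrm{Cov}(X, Y) = 0.$$

- $\mathrm{Cov}(X, Y) = 0$ does not imply that $X$ and $Y$ are independent. On the other hand, if $\mathrm{Cov}(X, Y) \ne 0$, then $X$ and $Y$ are not independent.

If $X$ and $Y$ are independent, then $E[g(X)h(Y)] = E[g(X)]\,E[h(Y)]$.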

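Example Toss an unbiased coin once. $X$ = number of heads, $Y$ = number of tails. Compute $\mathrm{Cov}(X, Y)$.

We have,

$$E[XY] = \sum_x \sum_y xy\,p(x, y) = 0,$$

since exactly one of $X$ and $Y$ equals $0$ on every outcome.

$E[X] = 1/2$, $E[Y] = 1/2$.

Therefore,

$$\mathrm{Cov}(X, Y) = E[XY] - E[X]E[Y] = 0 - \frac{1}{2} \cdot \frac{1}{2} = -\frac{1}{4}.$$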
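The covariance in this example can also be computed directly from the joint pmf. The sketch below uses the standard identity $\mathrm{Cov}(X, Y) = E[XY] - E[X]E[Y]$ and, as a sanity check, confirms that $X + Y$ has zero variance (since $X + Y = 1$ on every outcome). The helper names are hypothetical.

```python
from fractions import Fraction

# Joint pmf for one fair coin toss: X = number of heads, Y = number of tails.
# Pairs with probability zero are omitted.
joint = {(0, 1): Fraction(1, 2), (1, 0): Fraction(1, 2)}

def expect(g):
    """E[g(X, Y)] as a sum of g(x, y) * p(x, y) over the joint support."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

def cov(g, h):
    """Cov(g, h) = E[g h] - E[g] E[h] for functions g, h of (x, y)."""
    return expect(lambda x, y: g(x, y) * h(x, y)) - expect(g) * expect(h)

def first(x, y):
    return x

def second(x, y):
    return y

cov_xy = cov(first, second)
# Var(X + Y) = Var(X) + Var(Y) + 2 Cov(X, Y); note Var(X) = Cov(X, X).
var_sum = cov(first, first) + cov(second, second) + 2 * cov_xy

print(cov_xy, var_sum)
```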

Moment generating functions

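For a random variable $X$, the moment generating function of the random variable $X$ is defined for all $t \in \mathbb{R}$ by

$$M_X(t) = E\left[e^{tX}\right].$$

Similarly, for a random vector $X = (X_1, \dots, X_n)$, the moment generating function of the random vector is defined for all $t = (t_1, \dots, t_n) \in \mathbb{R}^n$ by

$$M_X(t) = E\left[e^{t_1 X_1 + \cdots + t_n X_n}\right].$$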

One of the most important results regarding moment generating functions (mgfs) is the following uniqueness theorem, which says that random variables with the same moment generating function have the same probability distribution.

Uniqueness Theorem Let $X$ and $Y$ be two random variables with mgfs $M_X$ and $M_Y$. If $M_X(t) = M_Y(t)$ for all $t \in (-h, h)$ for some $h > 0$, then $X$ and $Y$ have the same distribution.

Remark Moment generating functions characterize distributions. As in the case of the pmf and pdf, by looking at the moment generating function of $X$, we can identify the distribution of $X$.

- For example, if the mgf of $X$ is $M_X(t) = e^{\lambda(e^t - 1)}$, $t \in \mathbb{R}$, then by comparing with mgfs of random variables from known distributions, we can identify that $X$ follows a Poisson distribution with parameter $\lambda$.

Example We have a random vector , i.i.d., where each , for , is distributed as , i.e., . Find the distribution of .

Let . We want to compute the mgf of .

Since are independent,

Therefore, .
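The mgf technique for sums of independent random variables can be illustrated numerically. The sketch below assumes, purely for illustration, i.i.d. Poisson($\lambda$) components: it computes the mgf of one component from its pmf, raises it to the $n$th power (independence turns the mgf of a sum into a product of mgfs), and compares the result with the mgf of a Poisson($n\lambda$) variable. The values of `lam`, `n`, `t` and the truncation point `kmax` are assumptions.

```python
from math import exp, factorial

def poisson_pmf(k, lam):
    """Poisson(lam) pmf at a non-negative integer k."""
    return exp(-lam) * lam**k / factorial(k)

def mgf_from_pmf(pmf, t, kmax=100):
    """M(t) = E[exp(t X)], approximated by truncating the sum at kmax."""
    return sum(exp(t * k) * pmf(k) for k in range(kmax + 1))

lam, n, t = 1.5, 4, 0.3  # hypothetical values

m_single = mgf_from_pmf(lambda k: poisson_pmf(k, lam), t)
m_sum = m_single ** n    # mgf of the sum of n independent copies
m_target = mgf_from_pmf(lambda k: poisson_pmf(k, n * lam), t)

print(m_single, exp(lam * (exp(t) - 1)))
print(m_sum, m_target)
```

Under these assumptions the two mgfs agree, which by the uniqueness theorem identifies the sum as Poisson with parameter $n\lambda$.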

Some limit theorems

Weak Law of Large Numbers (WLLN)

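For $X_1, \dots, X_n$ i.i.d., with $E[X_i] = \mu$, such that $\mathrm{Var}(X_i) = \sigma^2 < \infty$,

for any $\epsilon > 0$, we have,

$$\lim_{n \to \infty} P\left(\left|\bar{X}_n - \mu\right| > \epsilon\right) = 0, \qquad \text{where } \bar{X}_n = \frac{1}{n}\sum_{i=1}^n X_i.$$

In words, with high probability, the sample mean of i.i.d. random variables is close to the population mean when $n$ is large.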
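A small simulation can make the WLLN concrete. The sketch below assumes fair coin flips (Bernoulli(0.5) variables, so the population mean is 0.5) and estimates how often the sample mean deviates from 0.5 by more than `eps`; the seed, `eps`, the number of trials, and the sample sizes are arbitrary illustrative choices.

```python
import random

def deviation_frequency(n, eps, trials, rng):
    """Fraction of trials in which |sample mean - 0.5| > eps for n fair coin flips."""
    count = 0
    for _ in range(trials):
        mean = sum(rng.random() < 0.5 for _ in range(n)) / n
        if abs(mean - 0.5) > eps:
            count += 1
    return count / trials

rng = random.Random(0)  # fixed seed so the run is reproducible
freqs = {n: deviation_frequency(n, eps=0.1, trials=500, rng=rng)
         for n in (10, 100, 1000)}
print(freqs)
```

The estimated deviation frequency should shrink toward zero as the sample size grows, in line with the theorem.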

Central Limit Theorem (CLT)

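For $X_1, \dots, X_n$ i.i.d., with $E[X_i] = \mu$, such that $0 < \mathrm{Var}(X_i) = \sigma^2 < \infty$,

for any $x \in \mathbb{R}$, we have,

$$\lim_{n \to \infty} P\left(\frac{\sqrt{n}\,(\bar{X}_n - \mu)}{\sigma} \le x\right) = \Phi(x), \tag{1}$$

where $\Phi$ is the cdf of a standard normal distribution. Equivalently,

$$\lim_{n \to \infty} P\left(\frac{S_n - n\mu}{\sigma\sqrt{n}} \le x\right) = \Phi(x), \tag{2}$$

where $S_n = \sum_{i=1}^n X_i$ and $\bar{X}_n = S_n / n$.

In words, the distribution of the standardized sample mean or sum is close to the standard normal distribution when $n$ is large.

We also write (1) and (2) as

$$\frac{\sqrt{n}\,(\bar{X}_n - \mu)}{\sigma} \xrightarrow{d} N(0, 1) \quad \text{and} \quad \frac{S_n - n\mu}{\sigma\sqrt{n}} \xrightarrow{d} N(0, 1),$$

or equivalently,

$$\bar{X}_n \overset{\text{approx}}{\sim} N\left(\mu, \frac{\sigma^2}{n}\right) \quad \text{and} \quad S_n \overset{\text{approx}}{\sim} N\left(n\mu, n\sigma^2\right) \quad \text{for large } n.$$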
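The CLT can be checked without simulation when the summands are Bernoulli, because the sum is then exactly Binomial. The sketch below assumes Bernoulli($p$) variables with $p = 0.5$ (an illustrative choice) and compares the exact cdf of the standardized sum with the standard normal cdf at a point `x`; the function names are hypothetical.

```python
from math import exp, floor, lgamma, log, sqrt
from statistics import NormalDist

def binom_cdf(m, n, p):
    """P(S_n <= m) for S_n ~ Binomial(n, p), summing the pmf on the log scale."""
    total = 0.0
    for k in range(0, min(m, n) + 1):
        log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
                   + k * log(p) + (n - k) * log(1 - p))
        total += exp(log_pmf)
    return total

def standardized_sum_cdf(x, n, p):
    """P((S_n - n*mu) / (sigma*sqrt(n)) <= x) with mu = p and sigma^2 = p(1 - p)."""
    mu, sigma = p, sqrt(p * (1 - p))
    return binom_cdf(floor(n * mu + x * sigma * sqrt(n)), n, p)

x, p = 1.0, 0.5  # illustrative choices
for n in (10, 100, 1000):
    print(n, standardized_sum_cdf(x, n, p), NormalDist().cdf(x))
```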